pacman::p_load(sf, sfdep, tmap, tidyverse, knitr, readxl)inclass_Ex02

Introduction to In Class Ex 02

Spatial Randomness

Spatial randomness assumption in geospatial analysis - assuming that it is randomly distributed across areas.

The assumption of spatial randomness states that the values of a variable at different locations in a spatial dataset are independent of each other, and there is no systematic spatial pattern or trend.

Key aspects of the spatial randomness assumption:

Independence of Observations: The assumption implies that the value of a variable at one location does not provide information about the values at neighboring locations. Each observation is considered to be independent of its spatial neighbors.

Homogeneity: The spatial randomness assumption assumes homogeneity or stationarity across the study area. This means that the statistical properties of the variable (mean, variance, etc.) do not vary systematically across space.

Random Spatial Distribution: There is no discernible pattern or structure in the spatial distribution of the variable. The distribution of values across space appears random and lacks systematic trends.

Isotropy: Isotropy refers to the idea that the spatial process is similar in all directions. In other words, the behavior of the variable is consistent regardless of the direction of measurement.

It’s important to note that the spatial randomness assumption may not hold in many real-world situations. Spatial autocorrelation, where values at nearby locations are correlated, is a violation of spatial randomness. Detecting and understanding spatial patterns and dependencies is a common goal in spatial analysis.

If spatial randomness is assumed, methods like global spatial autocorrelation tests (e.g., Moran’s I) and certain types of spatial regression models may be appropriate. However, when spatial patterns exist, additional spatial analysis techniques are needed to account for and model the spatial dependencies in the data.

Spatial Inequality

Spatial inequality refers to the unequal distribution of resources, opportunities, wealth, or development across different geographic areas. This form of inequality is characterized by disparities in economic, social, and environmental conditions between regions, cities, or neighborhoods. Spatial inequality can manifest in various ways and impact people’s quality of life, access to services, and overall well-being. Here are some key aspects of spatial inequality:

Economic Disparities:

- Income and Wealth: Spatial inequality often results in variations in income levels and wealth accumulation between different regions. Some areas may experience economic growth and prosperity, while others face economic decline and poverty.

Access to Services:

Education: Disparities in the quality of education and educational resources between regions can lead to differences in opportunities and outcomes for residents.

Healthcare: Unequal access to healthcare facilities and services can contribute to health disparities between spatial areas.

Infrastructure: Disparities in infrastructure development, such as transportation, utilities, and communication networks, can affect the overall development and connectivity of regions.

Employment Opportunities:

- Job Markets: Variations in the availability of employment opportunities and industries can lead to uneven economic development and employment rates across regions.

Housing and Living Conditions:

Housing Affordability: Spatial inequality may result in differences in housing costs and affordability, impacting the living conditions of residents.

Urban-Rural Divide: Disparities between urban and rural areas can contribute to spatial inequality, with urban areas often experiencing more significant economic development.

Social and Environmental Factors:

Social Services: Access to social services, cultural amenities, and recreational facilities can vary between regions.

Environmental Justice: Spatial inequality may be associated with environmental disparities, such as unequal exposure to pollution or access to green spaces.

Policy and Governance:

- Government Policies: Historical and contemporary government policies can contribute to or alleviate spatial inequality. Policies related to taxation, infrastructure investment, and social programs can influence regional disparities.

Addressing spatial inequality often requires targeted policies and interventions to promote more equitable development, improve access to opportunities, and enhance the well-being of residents in marginalized areas. Spatial planning, regional development strategies, and inclusive policies are crucial tools in mitigating spatial inequalities and fostering sustainable and balanced growth.

Spatial Weights

Spatial weights are a fundamental concept in spatial analysis and spatial statistics. They represent the spatial relationships between different locations or observations in a geographic space. Spatial weights are used to quantify the influence or connectivity between spatial units, and they play a crucial role in various spatial analysis techniques. Here are some key points about spatial weights:

Typically two types - adjacency weight or based on distance.

Definition:

- Spatial weights define the degree of influence or proximity between pairs of spatial units. They create a matrix that captures the spatial relationships in a dataset.

Types of Spatial Weights:

Binary / Adjacent Weights: Indicate whether spatial units are neighbors (1 for neighbors, 0 for non-neighbors). Can be based on whether adjacent or based on distance (e.g. within distance = 1)

Distance-Based Weights: Reflect the inverse of the distance between spatial units.

Contiguity Weights: Indicate whether spatial units share a common boundary or vertex.

Common Approaches to Define Spatial Weights:

k-Nearest Neighbors (kNN): Assign weights based on the k nearest neighbors for each spatial unit.

Distance Bands: Assign weights based on a threshold distance, considering units within the specified distance as neighbors.

Queen’s Contiguity: Assign weights of 1 to units that share a common boundary or vertex.

Rook’s Contiguity: Similar to Queen’s, but considers only shared edges, not vertices.

Spatial Weights Matrices:

- Spatial weights are often represented as matrices. A spatial weights matrix (W) is a square matrix where each element (Wij) represents the weight between spatial units i and j.

Applications:

Spatial Autocorrelation: Used in measures like Moran’s I to assess the degree of spatial clustering or dispersion of values.

Spatial Regression: Applied in spatial econometrics to model spatial dependencies in regression analyses.

Spatial Interpolation: Utilized in methods like kriging for spatial prediction.

Lag

Lag 2 neighbours will cover both Lag 1 and Lag 2 neighbours.

Tobler’s First Law of Geography

Tobler’s First law of Geography Everything is related to everything else, but near things are more related than distant things.

Two important concepts

Spatial Dependency - trying to find oil? where to dig? by using calculations

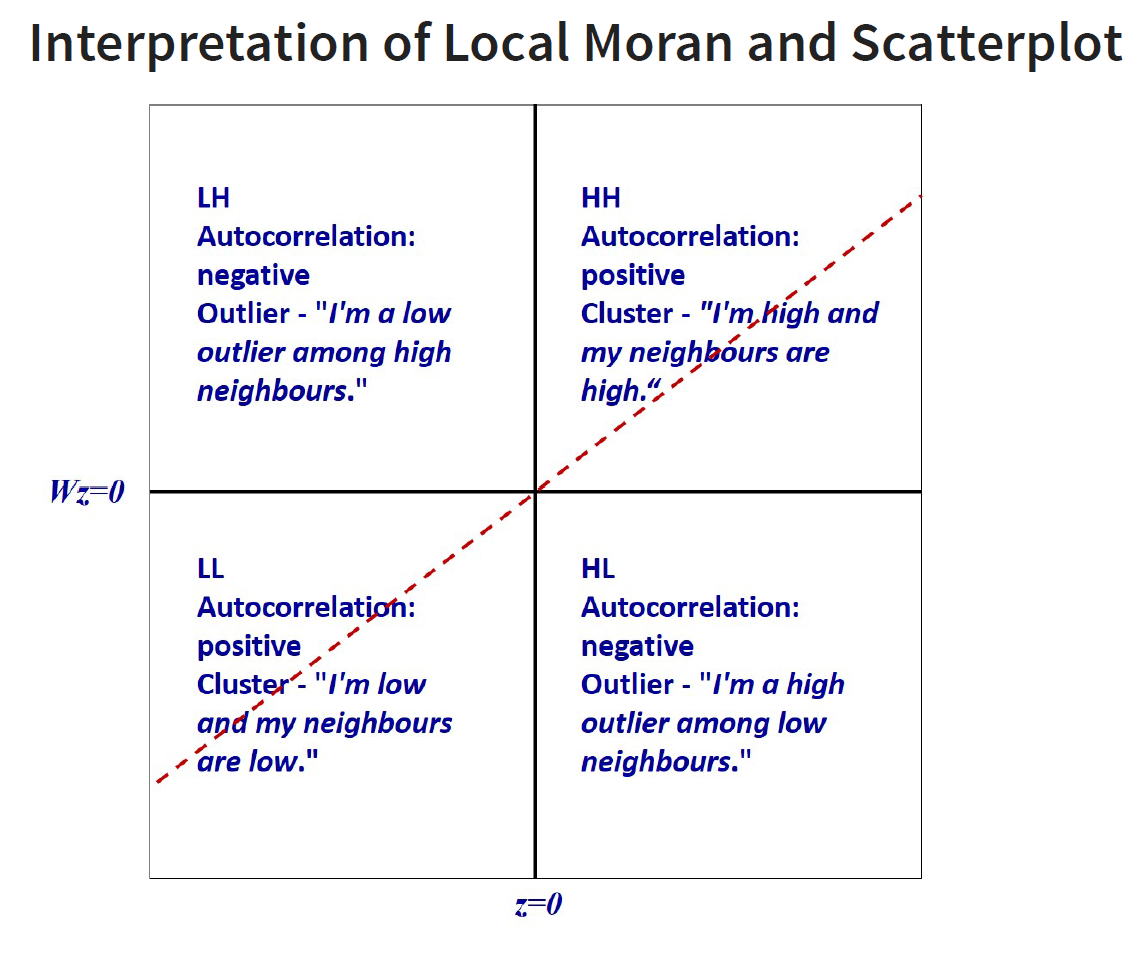

Spatial Autocorrelation - whether there is cluster or not; reject the null hypothesis that there is spatial randomness - can be positive and negative autocorrelation

Positive - you see lumps, clusters (high-high, low,-low)

Negative - you see checker boxes, outliers (low-high, high-low)

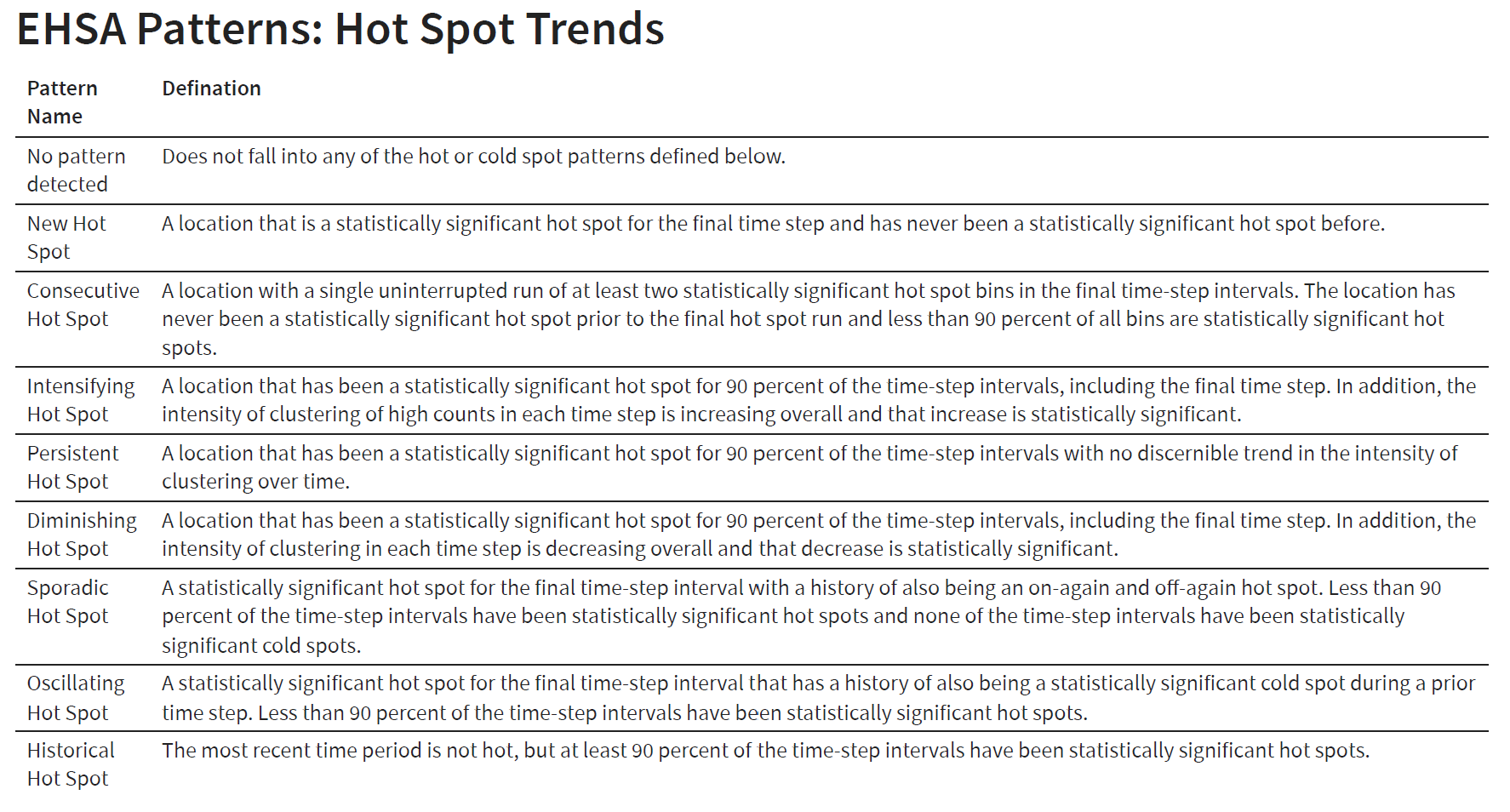

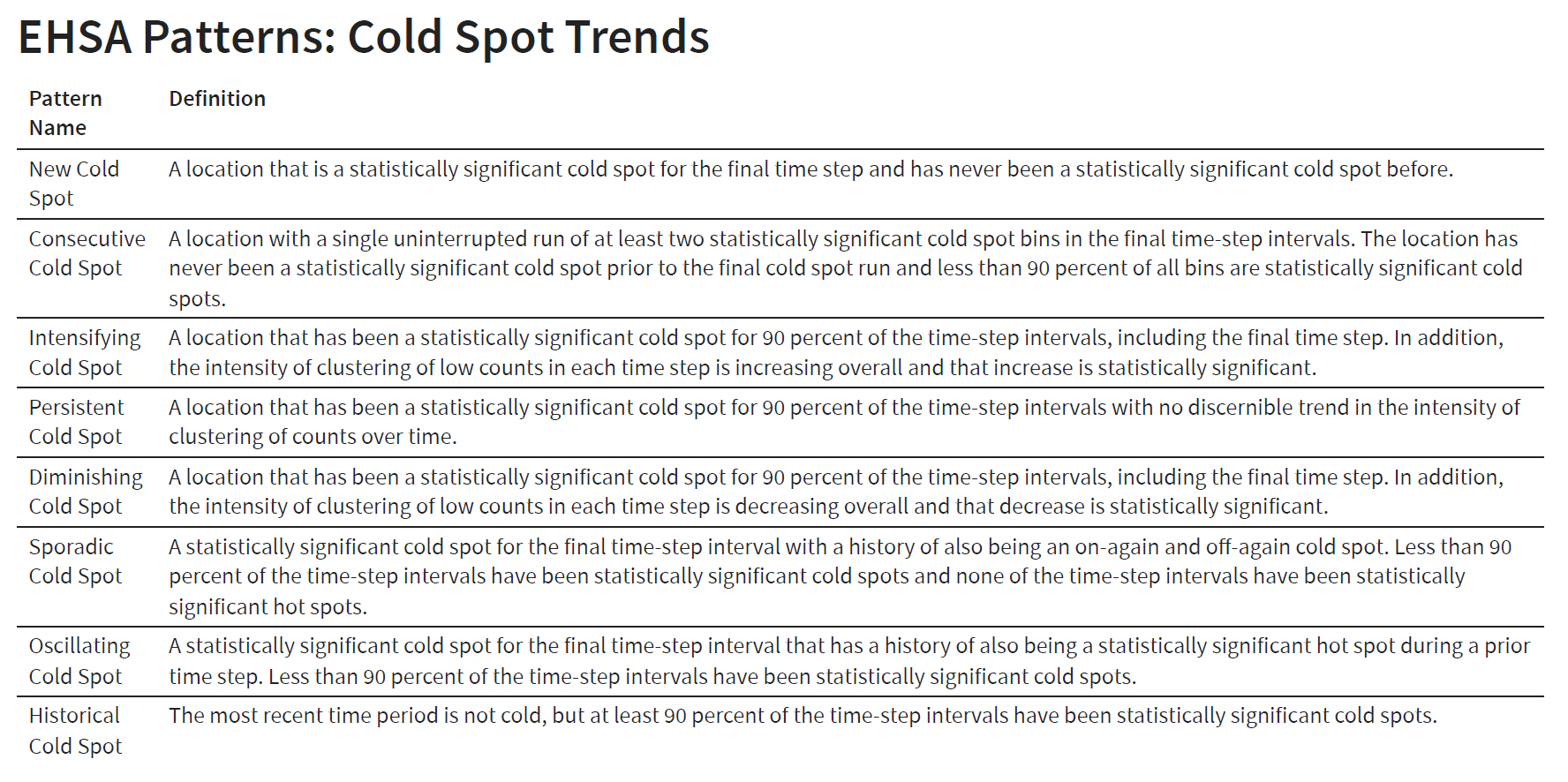

Time Series - Emerging Hot Spot Analysis (EHSA)

Mann-Kendall Test

The Mann-Kendall statistical test for trend is used to assess whether a set of data values is increasing over time or decreasing over time, and whether the trend in either direction is statistically significant.

It is a non-parametric test, which means it works for all distributions (i.e. your data doesn’t have to meet the assumption of normality), but your data should have no serial correlation. If your data does follow a normal distribution, you can run simple linear regression instead. Refer to this article for a full tutorial of how to perform Mann-Kendall test manually.

Your data isn’t collected seasonally (e.g. only during the summer and winter months), because the test won’t work if alternating upward and downward trends exist in the data. Another test—the Seasonal Kendall Test—is generally used for seasonally collected data. Your data does not have any covariates—other factors that could influence your data other than the ones you’re plotting. You have only one data point per time period. If you have multiple points, use the median value.

ESHA - It combines the traditional ESDA technique of hot spot analysis using the Getis-Ord Gi* statistic with the traditional time-series Mann-Kendall test for monotonic trends. The goal of EHSA is to evaluate how hot and cold spots are changing over time. It helps us answer the questions: are they becoming increasingly hotter, are they cooling down, or are they staying the same?

Ex for In Class

Sfdep Package

Focusing on sfdep - note that it is sf - a package built on spdep.

Loading the Packages

New package for this exercise - sfdep

sfdep builds on the great shoulders of spdep package for spatial dependence. sfdep creates an sf and tidyverse friendly interface to the package as well as introduces new functionality that is not present in spdep. sfdep utilizes list columns extensively to make this interface possible.

Getting the data ready

First - we will import the data.

We will start with the geospatial data - Hunan county, which is in ESRI shapefile format.

hunan <- st_read(dsn = "data/geospatial",

layer = "Hunan")Reading layer `Hunan' from data source

`C:\zjjgithubb\ISSS624\InClassEx\Ex02\data\geospatial' using driver `ESRI Shapefile'

Simple feature collection with 88 features and 7 fields

Geometry type: POLYGON

Dimension: XY

Bounding box: xmin: 108.7831 ymin: 24.6342 xmax: 114.2544 ymax: 30.12812

Geodetic CRS: WGS 84Next, we will import the aspatial data using the readr package.

hunan2012 <- read_csv("data/aspatial/Hunan_2012.csv")Hunan_GDP contains the GDP by County from 2004 to 2021.

HunanGDP <- read_xlsx("data/aspatial/Hunan_GDP.xlsx")Joining the Data

We will update the attribute table update the attribute table of hunan’s SpatialPolygonsDataFrame with the attribute fields of hunan2012 dataframe. This is performed by using left_join() of dplyr package.

Left join to retain the geospatial data (geometry data) - left hand side is hunan, and then i append the rest onto it.

hunan_GDPPC <- left_join(hunan, hunan2012) %>%

select(1:4, 7, 15)

#1:4 - Col 1 to 4

#7 - County

#15 - GDPPC

#Tidyverse - because its geospatial data, it will auto keep the geometry data - without the need to specify The select function is applied to the result of the left_join(hunan, hunan2012) operation. In other words, it is applied to the data frame that is the output of the left join.

In the context of the left join:

Columns 1 through 4 (

1:4) refer to columns 1 through 4 from the result of the left join.Column 7 (

7) refers to column 7 from the result of the left join.Column 15 (

15) refers to column 15 from the result of the left join.

Therefore, the columns selected by select are from the data frame that results from the left join operation. If there are columns with the same name in both hunan and hunan2012 data frames, the column names will be disambiguated in the result, typically with suffixes like _x for the left data frame and _y for the right data frame.

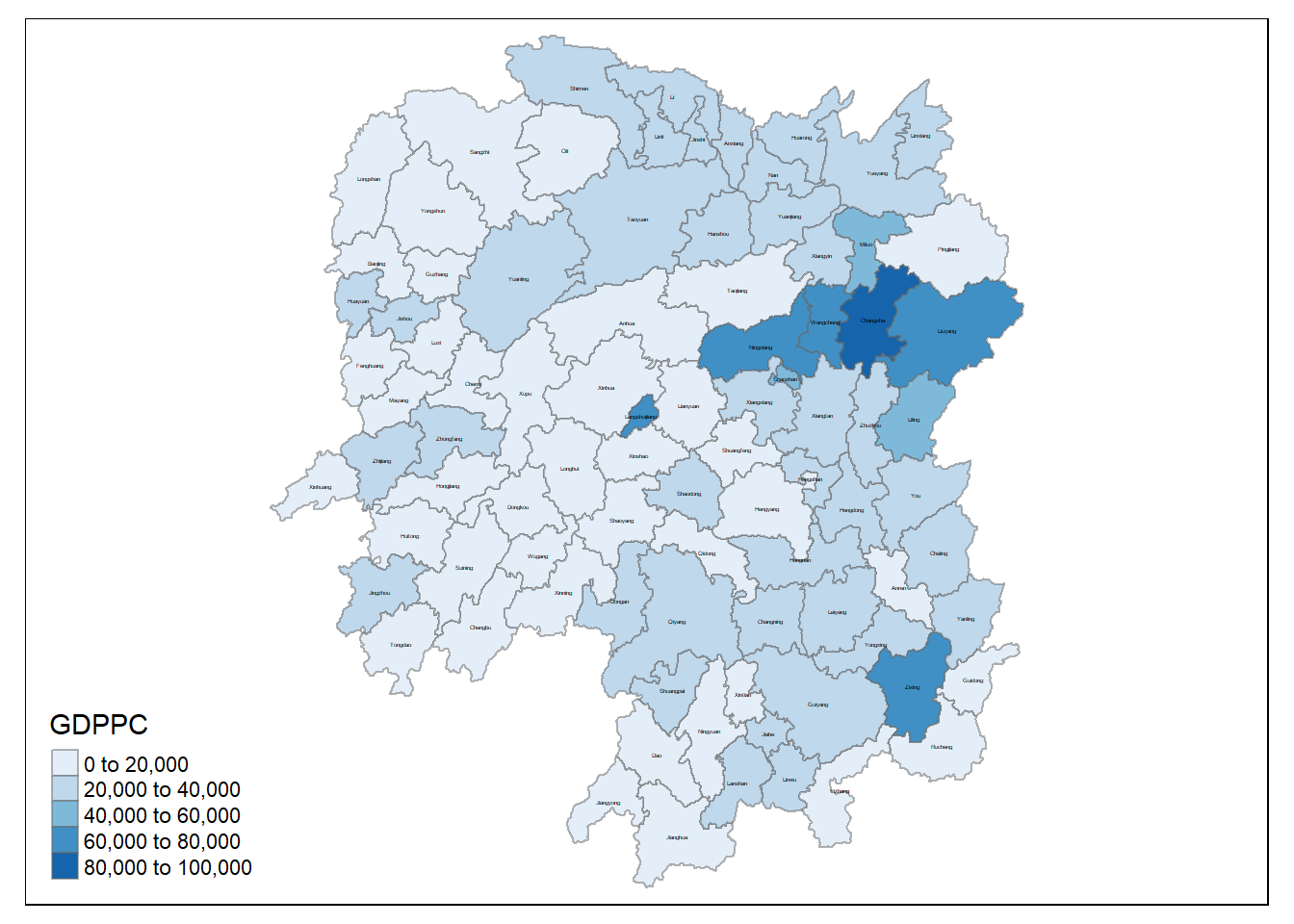

Visualising the Data - GDPPC

We will prepare a base map and a choropleth map showing the distribution of GDPPC 2012.

Hunan_GDPPCmap <- tm_shape(hunan_GDPPC) +

tm_fill(col = "GDPPC",

style = "pretty",

palette="Blues",

title = "GDPPC") +

tm_text("County", size = 0.2, col = "black") +

tm_borders(alpha = 0.5)

tmap_arrange(Hunan_GDPPCmap)

Global Measures of Spatial Association

sfdep utilizes list objects for both neighbors and weights. The neighbors and weights lists.

To identify contiguity-based neighbors, we use st_contiguity() on the sf geometry column. And to calculate the weights from the neighbors list, we use st_weights() on the resultant neighbors list. By convention these are typically called nb and wt.

These lists can be created line by line or within a pipe. The most common usecase is likely via a dplyr pipeline.

Identifying contiguity neighbours: Queen’s method

In the code chunk below st_contiguity() is used to derive a contiguity neighbour list by using Queen’s method.

nb_queen <- hunan_GDPPC %>%

mutate(nb = st_contiguity(geometry),

.before = 1)By default, queen argument is TRUE. If you do not specify queen = FALSE, this function will return a list of first order neighbours by using the Queen criteria. Rooks method will be used to identify the first order neighbour if queen = FALSE is used.

The code chunk below is used to print the summary of the first lag neighbour list (i.e. nb) .

summary(nb_queen$nb)Neighbour list object:

Number of regions: 88

Number of nonzero links: 448

Percentage nonzero weights: 5.785124

Average number of links: 5.090909

Link number distribution:

1 2 3 4 5 6 7 8 9 11

2 2 12 16 24 14 11 4 2 1

2 least connected regions:

30 65 with 1 link

1 most connected region:

85 with 11 linksThe summary report above shows that there are 88 area units in Hunan province. The most connected area unit has 11 neighbours. There are two are units with only one neighbour.

To view the content of the data table, you can either display the output data frame on RStudio data viewer or by printing out the first ten records by using the code chunk below.

kable(head(nb_queen,

n=10))| nb | NAME_2 | ID_3 | NAME_3 | ENGTYPE_3 | County | GDPPC | geometry |

|---|---|---|---|---|---|---|---|

| 2, 3, 4, 57, 85 | Changde | 21098 | Anxiang | County | Anxiang | 23667 | POLYGON ((112.0625 29.75523… |

| 1, 57, 58, 78, 85 | Changde | 21100 | Hanshou | County | Hanshou | 20981 | POLYGON ((112.2288 29.11684… |

| 1, 4, 5, 85 | Changde | 21101 | Jinshi | County City | Jinshi | 34592 | POLYGON ((111.8927 29.6013,… |

| 1, 3, 5, 6 | Changde | 21102 | Li | County | Li | 24473 | POLYGON ((111.3731 29.94649… |

| 3, 4, 6, 85 | Changde | 21103 | Linli | County | Linli | 25554 | POLYGON ((111.6324 29.76288… |

| 4, 5, 69, 75, 85 | Changde | 21104 | Shimen | County | Shimen | 27137 | POLYGON ((110.8825 30.11675… |

| 67, 71, 74, 84 | Changsha | 21109 | Liuyang | County City | Liuyang | 63118 | POLYGON ((113.9905 28.5682,… |

| 9, 46, 47, 56, 78, 80, 86 | Changsha | 21110 | Ningxiang | County | Ningxiang | 62202 | POLYGON ((112.7181 28.38299… |

| 8, 66, 68, 78, 84, 86 | Changsha | 21111 | Wangcheng | County | Wangcheng | 70666 | POLYGON ((112.7914 28.52688… |

| 16, 17, 19, 20, 22, 70, 72, 73 | Chenzhou | 21112 | Anren | County | Anren | 12761 | POLYGON ((113.1757 26.82734… |

Identify contiguity neighbours: Rooks’ method

nb_rook <- hunan_GDPPC %>%

mutate(nb = st_contiguity(geometry,

queen = FALSE),

.before = 1)Identifying higher order neighbors

There are times that we need to identify high order contiguity neighbours. To accomplish the task, st_nb_lag_cumul() should be used as shown in the code chunk below.

nb2_queen <- hunan_GDPPC %>%

mutate(nb = st_contiguity(geometry),

nb2 = st_nb_lag_cumul(nb, 2),

.before = 1)

nb2_queenSimple feature collection with 88 features and 8 fields

Geometry type: POLYGON

Dimension: XY

Bounding box: xmin: 108.7831 ymin: 24.6342 xmax: 114.2544 ymax: 30.12812

Geodetic CRS: WGS 84

First 10 features:

nb

1 2, 3, 4, 57, 85

2 1, 57, 58, 78, 85

3 1, 4, 5, 85

4 1, 3, 5, 6

5 3, 4, 6, 85

6 4, 5, 69, 75, 85

7 67, 71, 74, 84

8 9, 46, 47, 56, 78, 80, 86

9 8, 66, 68, 78, 84, 86

10 16, 17, 19, 20, 22, 70, 72, 73

nb2

1 2, 3, 4, 5, 6, 32, 56, 57, 58, 64, 69, 75, 76, 78, 85

2 1, 3, 4, 5, 6, 8, 9, 32, 56, 57, 58, 64, 68, 69, 75, 76, 78, 85

3 1, 2, 4, 5, 6, 32, 56, 57, 69, 75, 78, 85

4 1, 2, 3, 5, 6, 57, 69, 75, 85

5 1, 2, 3, 4, 6, 32, 56, 57, 69, 75, 78, 85

6 1, 2, 3, 4, 5, 32, 53, 55, 56, 57, 69, 75, 78, 85

7 9, 19, 66, 67, 71, 73, 74, 76, 84, 86

8 2, 9, 19, 21, 31, 32, 34, 35, 36, 41, 45, 46, 47, 56, 58, 66, 68, 74, 78, 80, 84, 85, 86

9 2, 7, 8, 19, 21, 35, 46, 47, 56, 58, 66, 67, 68, 74, 76, 78, 80, 84, 85, 86

10 11, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 70, 71, 72, 73, 74, 82, 83, 86

NAME_2 ID_3 NAME_3 ENGTYPE_3 County GDPPC

1 Changde 21098 Anxiang County Anxiang 23667

2 Changde 21100 Hanshou County Hanshou 20981

3 Changde 21101 Jinshi County City Jinshi 34592

4 Changde 21102 Li County Li 24473

5 Changde 21103 Linli County Linli 25554

6 Changde 21104 Shimen County Shimen 27137

7 Changsha 21109 Liuyang County City Liuyang 63118

8 Changsha 21110 Ningxiang County Ningxiang 62202

9 Changsha 21111 Wangcheng County Wangcheng 70666

10 Chenzhou 21112 Anren County Anren 12761

geometry

1 POLYGON ((112.0625 29.75523...

2 POLYGON ((112.2288 29.11684...

3 POLYGON ((111.8927 29.6013,...

4 POLYGON ((111.3731 29.94649...

5 POLYGON ((111.6324 29.76288...

6 POLYGON ((110.8825 30.11675...

7 POLYGON ((113.9905 28.5682,...

8 POLYGON ((112.7181 28.38299...

9 POLYGON ((112.7914 28.52688...

10 POLYGON ((113.1757 26.82734...Deriving contiguity weights: Queen Method

wm_q <- hunan_GDPPC %>%

mutate(nb = st_contiguity(geometry),

wt = st_weights(nb,

style="W"),

.before = 1) # to put them in the frontStarting from a binary neighbours list, in which regions are either listed as neighbours or are absent (thus not in the set of neighbours for some definition), the function adds a weights list with values given by the coding scheme style chosen:

B is the basic binary coding, W is row standardised (sums over all links to n), C is globally standardised (sums over all links to n), U is equal to C divided by the number of neighbours (sums over all links to unity), while S is the variance-stabilizing coding scheme proposed by Tiefelsdorf et al. 1999, p. 167-168 (sums over all links to n).

If zero policy is set to TRUE, weights vectors of zero length are inserted for regions without neighbour in the neighbours list. These will in turn generate lag values of zero, equivalent to the sum of products of the zero row t(rep(0, length=length(neighbours))) %*% x, for arbitraty numerical vector x of length length(neighbours). The spatially lagged value of x for the zero-neighbour region will then be zero, which may (or may not) be a sensible choice.

Distance-based Weights

There are three popularly used distance-based spatial weights, they are:

fixed distance weights,

adaptive distance weights, and

inverse distance weights (IDW).

Deriving fixed distance weights

Before we can derive the fixed distance weights, we need to determine the upper limit for distance band by using the steps below:

geo <- sf::st_geometry(hunan_GDPPC)

nb <- st_knn(geo, longlat = TRUE)

dists <- unlist(st_nb_dists(geo, nb))st_nb_dists()of sfdep is used to calculate the nearest neighbour distance. The output is a list of distances for each observation’s neighbors list.unlist()of Base R is then used to return the output as a vector so that the summary statistics of the nearest neighbour distances can be derived.

Now, we will go ahead to derive summary statistics of the nearest neighbour distances vector (i.e. dists) by usign the coced chunk below.

summary (dists) Min. 1st Qu. Median Mean 3rd Qu. Max.

21.56 29.11 36.89 37.34 43.21 65.80 The summary statistics report above shows that the maximum nearest neighbour distance is 65.80km. By using a threshold value of 66km will ensure that each area will have at least one neighbour.

Now we will go ahead to compute the fixed distance weights by using the code chunk below.

wm_fd <- hunan_GDPPC %>%

mutate(nb = st_dist_band(geometry,

upper = 66),

wt = st_weights(nb),

.before = 1)Deriving Adaptive Distance Weights

wm_ad <- hunan_GDPPC %>%

mutate(nb = st_knn(geometry,

k=8),

wt = st_weights(nb),

.before = 1)st_knn()of sfdep is used to identify neighbors based on k (i.e. k = 8 indicates the nearest eight neighbours). The output is a list of neighbours (i.e. nb).st_weights()is then used to calculate polygon spatial weights of the nb list. Note that:the default

styleargument is set to “W” for row standardized weights, andthe default

allow_zerois set to TRUE, assigns zero as lagged value to zone without neighbors.

Derive Inverse Distance Weights

wm_idw <- hunan_GDPPC %>%

mutate(nb = st_contiguity(geometry),

wts = st_inverse_distance(nb, geometry,

scale = 1,

alpha = 1),

.before = 1)st_contiguity()of sfdep is used to identify the neighbours by using contiguity criteria. The output is a list of neighbours (i.e. nb).st_inverse_distance()is then used to calculate inverse distance weights of neighbours on the nb list.

Computing Global Moran’s I

In the code chunk below, global_moran() function is used to compute the Moran’s I value. Different from spdep package, the output is a tibble data.frame.

moranI <- global_moran(wm_q$GDPPC,

wm_q$nb,

wm_q$wt)

glimpse(moranI)List of 2

$ I: num 0.301

$ K: num 7.64Performing Global Moran’s I test

In general, Moran’s I test will be performed instead of just computing the Moran’s I statistics. With sfdep package, Moran’s I test can be performed by using global_moran_test() as shown in the code chunk below.

global_moran_test(wm_q$GDPPC,

wm_q$nb,

wm_q$wt)

Moran I test under randomisation

data: x

weights: listw

Moran I statistic standard deviate = 4.7351, p-value = 1.095e-06

alternative hypothesis: greater

sample estimates:

Moran I statistic Expectation Variance

0.300749970 -0.011494253 0.004348351 Performing Global Moran’I permutation test

In practice, monte carlo simulation should be used to perform the statistical test. For sfdep, it is supported by globel_moran_perm()

It is always a good practice to use set.seed() before performing simulation. This is to ensure that the computation is reproducible.

set.seed(1234)

global_moran_perm(wm_q$GDPPC,

wm_q$nb,

wm_q$wt,

nsim = 99)

Monte-Carlo simulation of Moran I

data: x

weights: listw

number of simulations + 1: 100

statistic = 0.30075, observed rank = 100, p-value < 2.2e-16

alternative hypothesis: two.sidedThe numbers of simulation is alway equal to nsim + 1. This mean in nsim = 99. This mean 100 simulation will be performed.

Local Measures of Spatial Association - sfdep methods

Using sfdep - calculate LISA

To calculate LISAs we typically will provide a numeric object(s), a neighbor list, and a weights list–and often the argument nsim to determine the number of simulations to run. Most LISAs return a data frame of the same number of rows as the input dataframe. The resultant data frame can be unnested, or columns hoisted for ease of analysis.

For example to calculate the Local Moran we use the function local_moran()

lisa <- wm_q %>%

mutate(local_moran = local_moran(

GDPPC, nb, wt, nsim = 999),

.before = 1) %>%

unnest(local_moran)

#unnest to create individual columns The output of local_moran() is a sf data.frame containing the columns ii, eii, var_ii, z_ii, p_ii, p_ii_sim, and p_folded_sim.

ii: local moran statistic

eii: expectation of local moran statistic; for localmoran_permthe permutation sample means

var_ii: variance of local moran statistic; for localmoran_permthe permutation sample standard deviations

z_ii: standard deviate of local moran statistic; for localmoran_perm based on permutation sample means and standard deviations p_ii: p-value of local moran statistic using pnorm(); for localmoran_perm using standard deviatse based on permutation sample means and standard deviations p_ii_sim: For

localmoran_perm(),rank()andpunif()of observed statistic rank for [0, 1] p-values usingalternative=-p_folded_sim: the simulation folded [0, 0.5] range ranked p-value (based on https://github.com/pysal/esda/blob/4a63e0b5df1e754b17b5f1205b cadcbecc5e061/esda/crand.py#L211-L213)skewness: For

localmoran_perm, the output of e1071::skewness() for the permutation samples underlying the standard deviateskurtosis: For

localmoran_perm, the output of e1071::kurtosis() for the permutation samples underlying the standard deviates.

Visualising LISA

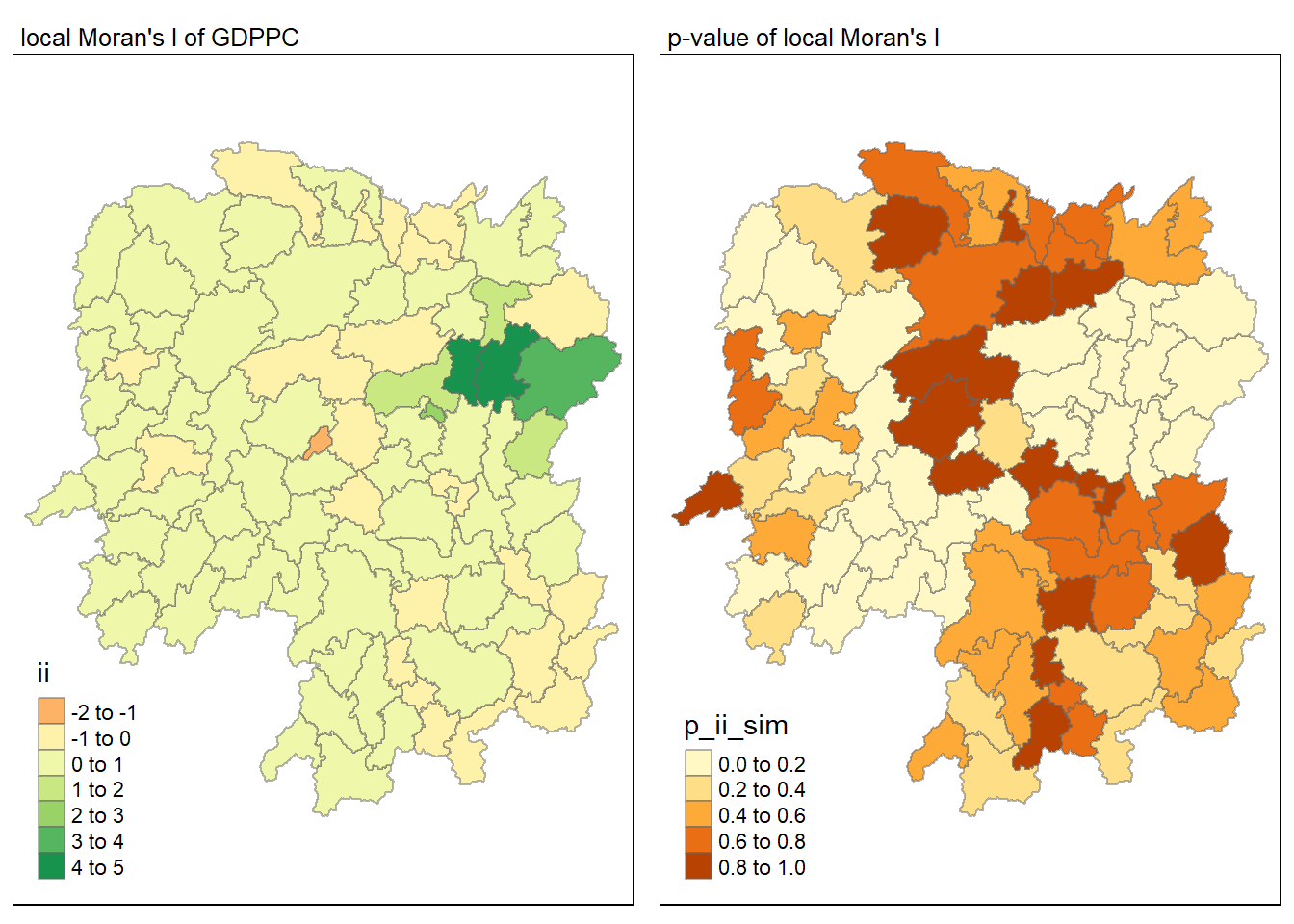

Visualising local Moran’s I and p-value

tmap_mode("plot")

map1<- tm_shape(lisa) +

tm_fill("ii") +

tm_borders(alpha = 0.5) +

tm_view(set.zoom.limits = c(6,8)) +

tm_layout(main.title = "local Moran's I of GDPPC",

main.title.size = 0.8)

map2 <- tm_shape(lisa) +

tm_fill("p_ii_sim") +

tm_borders(alpha = 0.5) +

tm_layout(main.title = "p-value of local Moran's I",

main.title.size = 0.8)

tmap_arrange(map1, map2, ncol = 2)

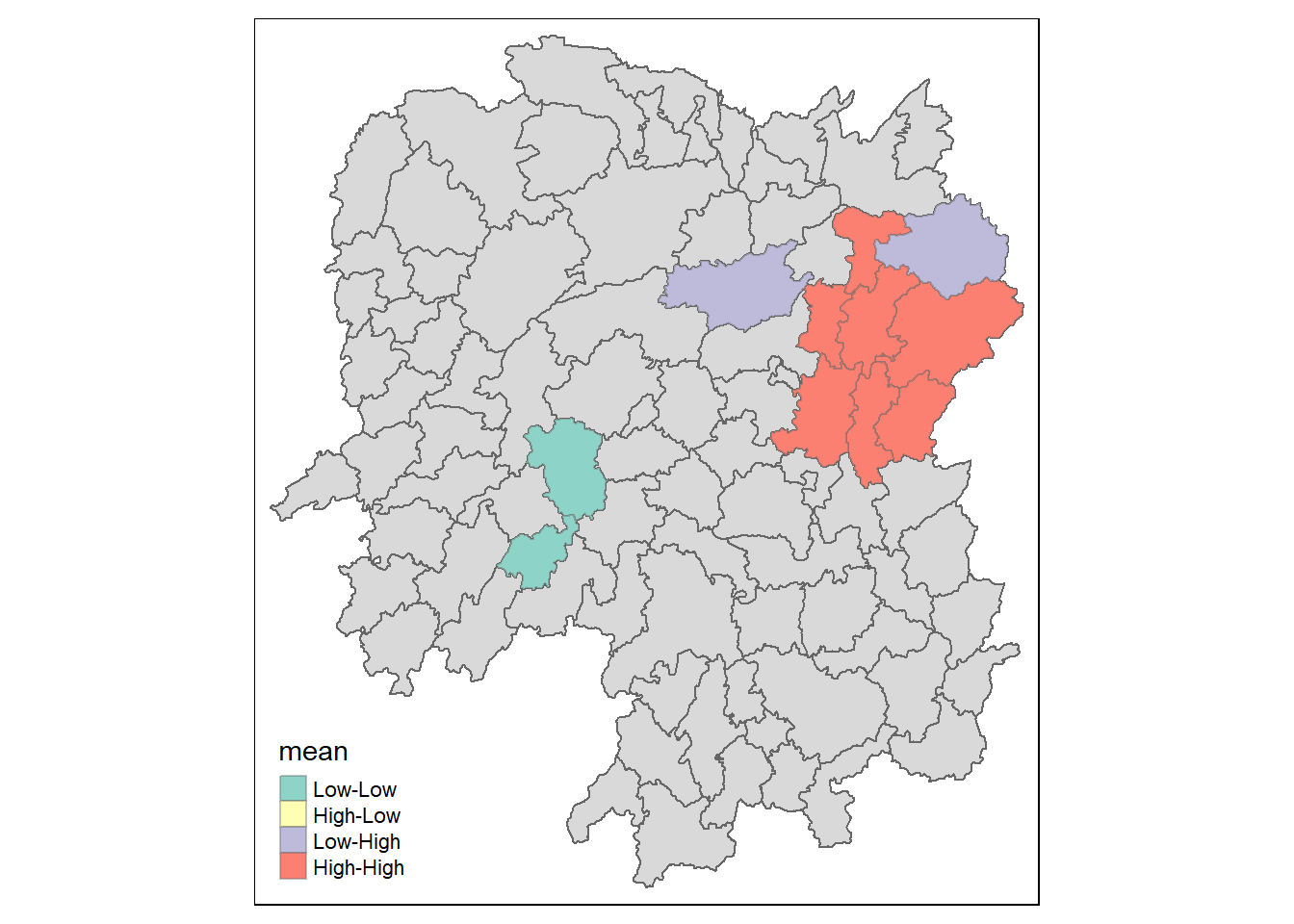

Visualising LISA map

LISA map is a categorical map showing outliers and clusters. There are two types of outliers namely: High-Low and Low-High outliers. Likewise, there are two type of clusters namely: High-High and Low-Low cluaters. In fact, LISA map is an interpreted map by combining local Moran’s I of geographical areas and their respective p-values.

In lisa sf data.frame, we can find three fields contain the LISA categories. They are mean, median and pysal. In general, classification in mean will be used as shown in the code chunk below.

lisa_sig <- lisa %>%

filter(p_ii < 0.05)

tmap_mode("plot")

tm_shape(lisa) +

tm_polygons() +

tm_borders(alpha = 0.5) +

tm_shape(lisa_sig) +

tm_fill("mean") +

tm_borders(alpha = 0.4)

Time-Series - ESHA

Import the packages

plotly - makes the graph interactive - useful for time series.

pacman::p_load(sf, sfdep, tmap, tidyverse, knitr, plotly)Import the Time Series Data

Hunan_GDPPC by years - comprising data of the GDPPC in 2005, 2006 and 2007 by the County.

The time data field must be in Integers and not character.

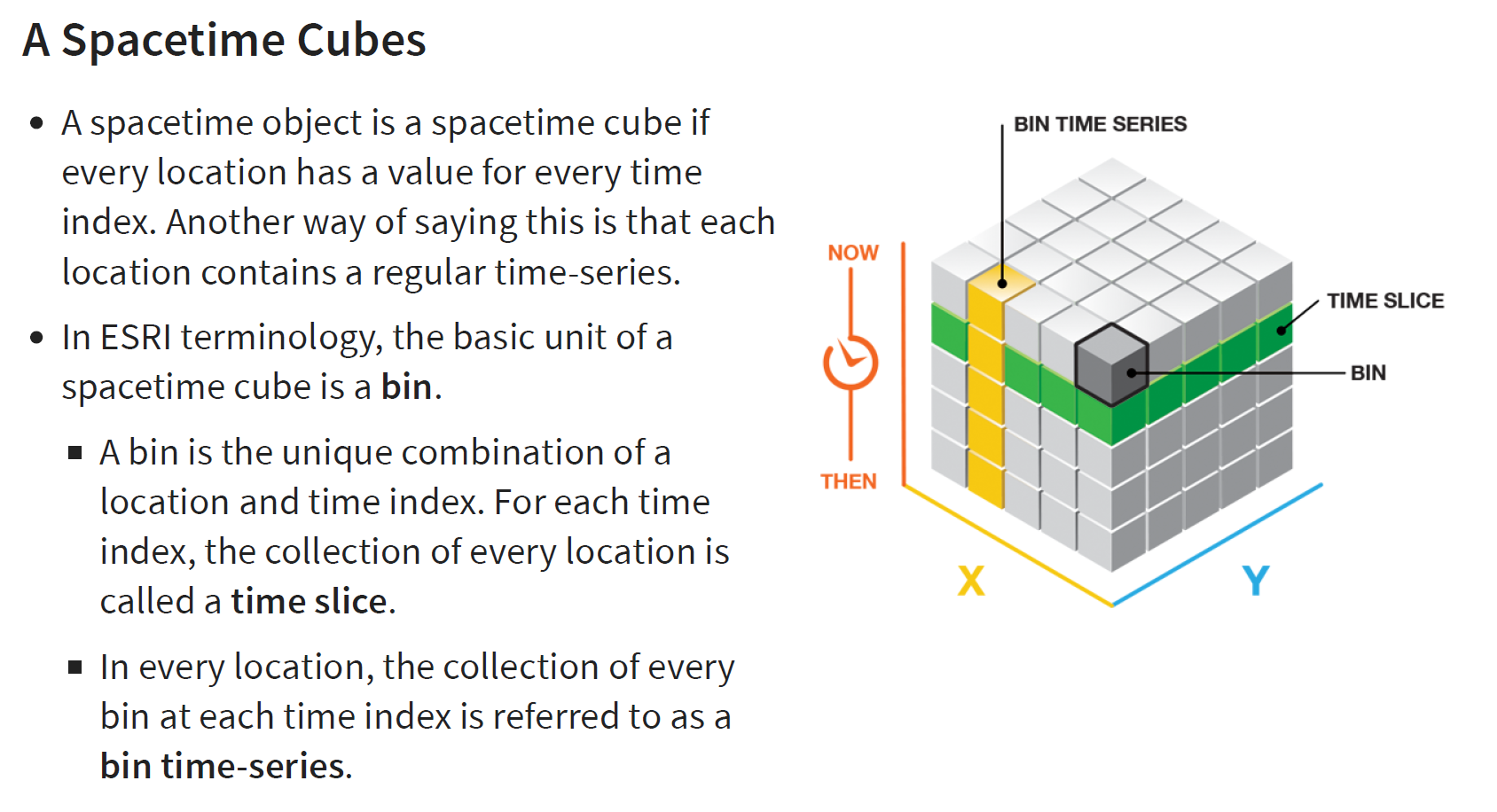

GDPPC <- read_csv("data/aspatial/Hunan_GDPPC.csv")Creating a Time Series Cube - Space Time Cube

Before getting started, students must read this article to learn the basic concept of spatio-temporal cube and its implementation in sfdep package.

In the code chunk below, spacetime() of sfdep is used to create an spacetime cube.

Next, is_spacetime_cube() of sfdep package will be used to varify if GDPPC_st is indeed an space-time cube object.

hunan_spt <- spacetime(GDPPC, hunan,

.loc_col = "County",

.time_col = "Year")

is_spacetime_cube(hunan_spt)[1] TRUEComputing GI*

Deriving the Spatial Weights

activate()of dplyr package is used to activate the geometry contextmutate()of dplyr package is used to create two new columns nb and wt.Then we will activate the data context again and copy over the nb and wt columns to each time-slice using

set_nbs()andset_wts()- row order is very important so do not rearrange the observations after using

set_nbs()orset_wts().

- row order is very important so do not rearrange the observations after using

GDPPC_nb <- hunan_spt %>%

activate("geometry") %>%

mutate(nb = include_self(st_contiguity(geometry)),

wt = st_inverse_distance(nb, geometry, scale = 1, alpha = 1),

.before = 1) %>%

set_nbs("nb") %>%

set_wts("wt")We can use these new columns to manually calculate the local Gi* for each location. We can do this by grouping by Year and using local_gstar_perm() of sfdep package. After which, we use unnest() to unnest gi_star column of the newly created gi_starts data.frame.

gi_stars <- GDPPC_nb %>%

group_by(Year) %>%

mutate(gi_star = local_gstar_perm(GDPPC, nb, wt)) %>%

tidyr::unnest(gi_star)Mandall Kendell Test

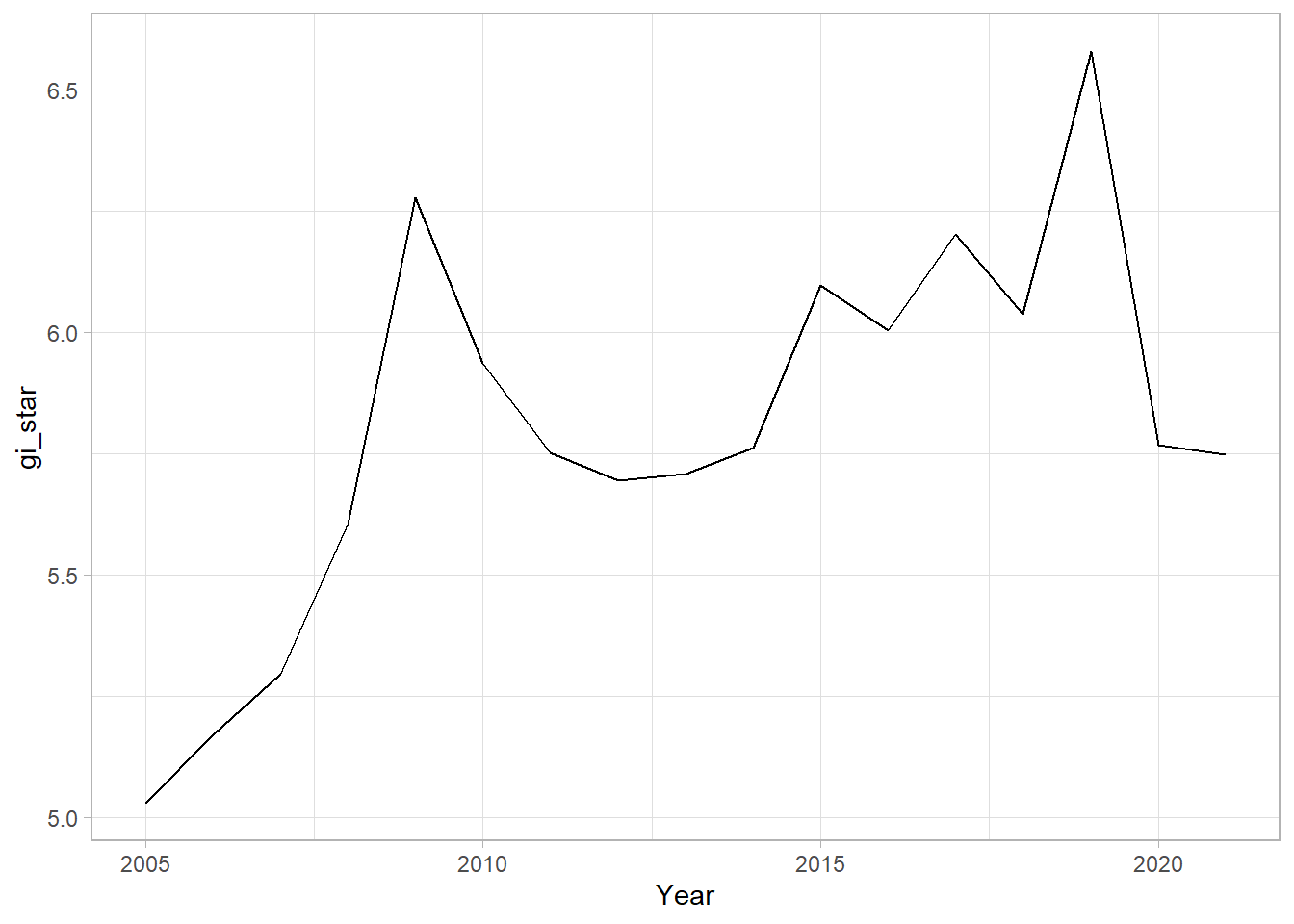

With these Gi* measures we can then evaluate each location for a trend using the Mann-Kendall test. The code chunk below uses Changsha county.

cbg <- gi_stars |>

ungroup() |>

filter(County == "Changsha") |>

select(County, Year, gi_star)Plot - graph:

ggplot(cbg, aes(Year, gi_star)) +

geom_line() +

theme_light()

Plotting using an interactive plot

Using plotly package:

p <- ggplot(data = cbg,

aes(x = Year,

y = gi_star)) +

geom_line() +

theme_light()

ggplotly(p)cbg %>%

summarise(mk = list(

unclass(

Kendall::MannKendall(gi_star)))) %>%

tidyr::unnest_wider(mk)# A tibble: 1 x 5

tau sl S D varS

<dbl> <dbl> <dbl> <dbl> <dbl>

1 0.485 0.00742 66 136. 589.In the above result, sl is the p-value. This result tells us that there is a slight upward but insignificant trend.

We can replicate this for each location by using group_by() of dplyr package.

ehsa <- gi_stars %>%

group_by(County) %>%

summarise(mk = list(

unclass(

Kendall::MannKendall(gi_star)))) %>%

tidyr::unnest_wider(mk)Arranging to show significant hot/cold spots.

emerging <- ehsa %>%

arrange(sl, abs(tau)) %>%

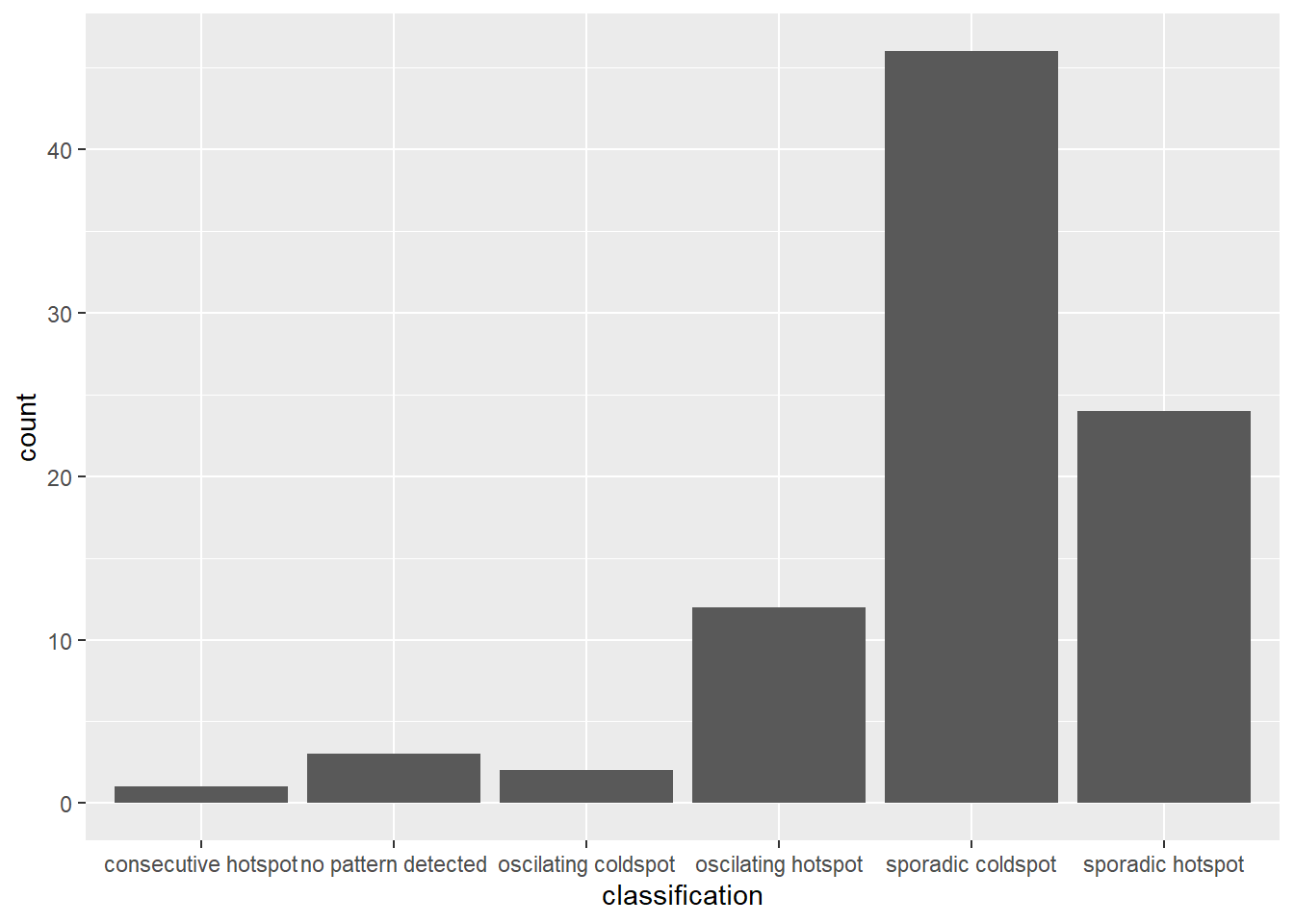

slice(1:10)EHSA

In EHSA we can—and likely should—incorporate the time-lag of our spatial neighbors. Secondly, there are classifications proposed by ESRI which help us understand how each location is changing over time. Both of these are handled by the emerging_hotspot_analysis() function.

This emerging_hotspot_analysis() takes a spacetime object x, and the quoted name of the variable of interested in .var at minimum. We can specify the number of time lags using the argument k which is set to 1 by default.

ehsa <- emerging_hotspot_analysis(

x = hunan_spt,

.var = "GDPPC",

k = 1,

nsim = 99

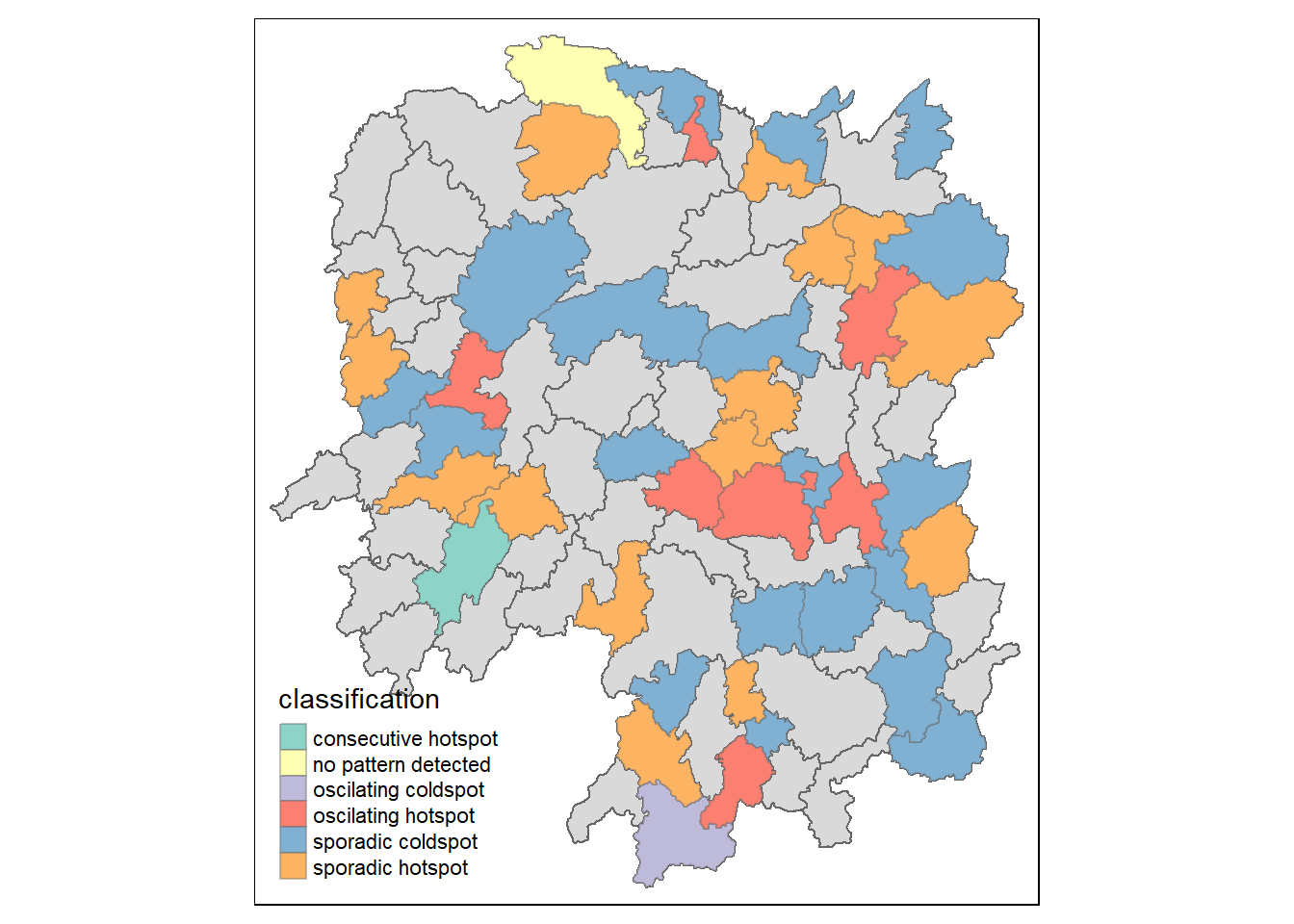

)count(ehsa, classification)# A tibble: 6 x 2

classification n

<chr> <int>

1 consecutive hotspot 1

2 no pattern detected 3

3 oscilating coldspot 2

4 oscilating hotspot 12

5 sporadic coldspot 46

6 sporadic hotspot 24We can plot to reveal the distribution instead.

ggplot(data = ehsa,

aes(x = classification)) +

geom_bar()

Visualising EHSA Data

To visualised the EHSA data - we need to join it with the geospatial data.

hunan_ehsa <- hunan %>%

left_join(ehsa,

by = join_by(County == location))Then, we can use the tmap function to plot.

ehsa_sig <- hunan_ehsa %>%

filter(p_value < 0.05)

tmap_mode("plot")

tm_shape(hunan_ehsa) +

tm_polygons() +

tm_borders(alpha = 0.5) +

tm_shape(ehsa_sig) +

tm_fill("classification") +

tm_borders(alpha = 0.4)